8 Bits Per Byte Explained

In the realm of computer science and digital information, the terms “bit” and “byte” are fundamental. These units of measurement are crucial for understanding how data is stored, processed, and transmitted in digital systems. At the heart of this understanding lies the relationship between bits and bytes, with a specific number of bits making up a byte. This article delves into the explanation of why there are 8 bits per byte, exploring the historical context, the technical reasons behind this standard, and the implications of this arrangement in computing and data storage.

Historical Context: The Evolution of Byte Size

The concept of a byte as a unit of digital information originated in the early days of computing. The term “byte” was coined in 1956 by Werner Buchholz during the design of IBM's Stretch computer, where it described a group of bits used to encode a single character of text. Byte sizes were not fixed at first: many machines of the period used 6-bit character codes, and ASCII, first published in 1963, needed 7 bits per character. As computing technology evolved, the need for a standardized unit of measurement for digital information became apparent. The choice of 8 bits as the standard size for a byte was influenced by several factors, including the design of early computers and the requirements of character encoding systems.

One of the most influential computer families, IBM's System/360, played a significant role in establishing the 8-bit byte as a standard. The System/360, announced in 1964, was designed to handle both commercial and scientific applications efficiently. Its architecture used the 8-bit byte as the addressable unit of memory and for character representation (in the EBCDIC encoding), laying the groundwork for what would become the ubiquitous standard in computing.

Technical Reasons: Why 8 Bits?

The decision to standardize on 8 bits per byte was not arbitrary. Several technical considerations made 8 a more favorable choice than other potential byte sizes:

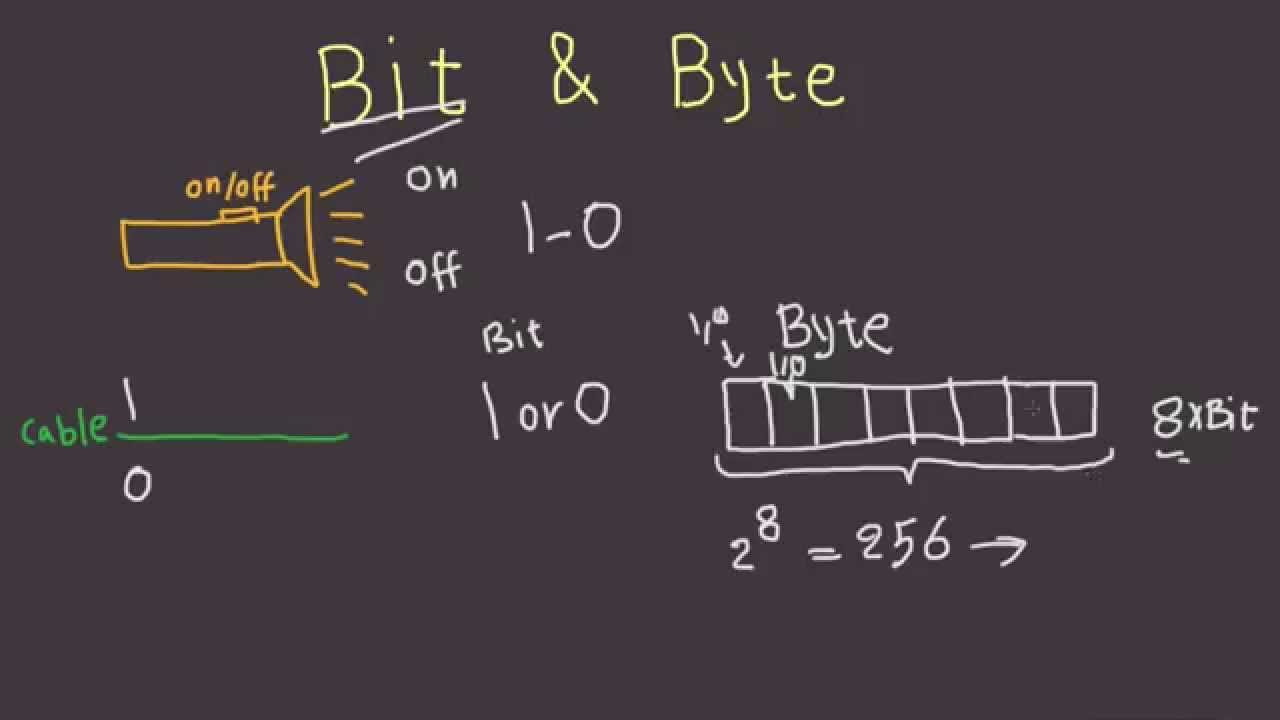

Efficiency in Character Representation: With 8 bits, a byte can hold 256 (2^8) distinct values. This range covers the standard ASCII character set (which requires 7 bits) and leaves 128 further values for other characters and symbols, which extended 8-bit encodings such as ISO 8859-1 used for accented letters. Larger character sets are handled by encodings such as UTF-8, which combine several bytes per character.
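The arithmetic behind these figures can be checked with a short Python sketch. The helper name `distinct_values` and the sample string are illustrative assumptions, not part of any standard.

```python
BITS_PER_BYTE = 8

def distinct_values(bits: int) -> int:
    """Return how many distinct bit patterns fit in the given number of bits."""
    return 2 ** bits

byte_values = distinct_values(BITS_PER_BYTE)  # patterns in one 8-bit byte
ascii_values = distinct_values(7)             # patterns in 7-bit ASCII

# Every ASCII character encodes to one byte whose value is below 128,
# so the top bit of that byte is always 0.
encoded = "Byte".encode("ascii")
fits_in_seven_bits = all(b < ascii_values for b in encoded)

print(byte_values, ascii_values)          # 256 128
print(list(encoded), fits_in_seven_bits)  # [66, 121, 116, 101] True
```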

Binary and Hexadecimal Systems: The binary system, which computers use internally, is based on powers of 2, and 8 is itself a power of 2 (2^3), which keeps byte-level bit arithmetic simple. Moreover, the hexadecimal system (base 16), commonly used in programming and data representation, maps directly onto binary: each hexadecimal digit stands for exactly 4 bits, so one 8-bit byte is always written as exactly two hexadecimal digits, from 00 to FF.
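As a minimal sketch of that relationship, the following Python function splits a byte into two 4-bit halves and maps each half to one hexadecimal digit. The function name `byte_to_hex` is a hypothetical helper; Python's built-in `bytes.hex()` does the same job.

```python
def byte_to_hex(value: int) -> str:
    """Render one byte (0..255) as exactly two hexadecimal digits."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value does not fit in one 8-bit byte")
    digits = "0123456789abcdef"
    high_half = value >> 4    # upper 4 bits
    low_half = value & 0x0F   # lower 4 bits
    return digits[high_half] + digits[low_half]

print(byte_to_hex(0b10101111))   # af
print(format(0xAF, "08b"))       # 10101111
print(bytes([0xAF]).hex())       # af (built-in equivalent)
```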

Data Processing and Transfer: From a data processing standpoint, using 8 bits as a standard unit simplifies operations. The word sizes of common processors (16, 32, and 64 bits) are whole multiples of 8, so registers, buses, and memory chips all handle a whole number of bytes, and arithmetic and logical operations can be applied to individual bytes with simple masks and shifts.
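To illustrate byte-level operations, here is a small Python sketch. The names `add_u8` and `split_word` are hypothetical helpers; the masking with `0xFF` imitates how an 8-bit register discards anything above its eighth bit.

```python
BYTE_MASK = 0xFF  # eight 1-bits

def add_u8(a: int, b: int) -> int:
    """Add two byte values, wrapping around at 256 like an 8-bit register."""
    return (a + b) & BYTE_MASK

def split_word(word: int) -> list[int]:
    """Split a 32-bit value into its four bytes, most significant first."""
    return [(word >> shift) & BYTE_MASK for shift in (24, 16, 8, 0)]

print(add_u8(200, 100))                           # 44, since 300 - 256 = 44
print([hex(b) for b in split_word(0x12345678)])   # ['0x12', '0x34', '0x56', '0x78']
```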

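Memory Addressing: In computer memory, addresses are used to locate specific bytes of data. Most modern architectures are byte-addressable: each address identifies one 8-bit byte, and larger values such as 32-bit integers occupy several consecutive addresses. Addressing at byte granularity is a compromise. It is fine enough to reach individual characters directly, yet coarse enough to avoid the longer addresses and more complex circuitry that addressing every single bit would require.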
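Byte addressing can be modeled in Python with a `bytearray`, where each index plays the role of a memory address. This is only a toy model under assumed conditions: the 16-byte memory size, the little-endian byte order, and the helper names `store_u32` and `load_u32` are all illustrative choices.

```python
memory = bytearray(16)  # 16 addressable bytes, addresses 0..15

def store_u32(mem: bytearray, address: int, value: int) -> None:
    """Write a 32-bit unsigned value into four consecutive byte addresses."""
    mem[address:address + 4] = value.to_bytes(4, "little")

def load_u32(mem: bytearray, address: int) -> int:
    """Read a 32-bit unsigned value back from four consecutive byte addresses."""
    return int.from_bytes(mem[address:address + 4], "little")

store_u32(memory, 4, 0x12345678)

# Each address still holds a single byte that can be read on its own.
print(hex(memory[4]), hex(memory[7]))   # 0x78 0x12 (little-endian order)
print(hex(load_u32(memory, 4)))         # 0x12345678
```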

Implications in Computing and Data Storage

The standardization of 8 bits per byte has profound implications for computing and data storage:

Data Representation: It sets the foundation for how text, numbers, and other forms of data are represented in computers. The widespread adoption of this standard ensures compatibility across different hardware and software platforms.

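Programming: The sizes of data types in programming languages (like char, int, float) are expressed in bytes. In C, for example, a char is one byte by definition, and the standard requires a byte to have at least 8 bits (CHAR_BIT), which is exactly 8 on virtually all current platforms. Knowing that a byte equals 8 bits lets programmers work out the range of each type, such as -128 to 127 for a signed 8-bit integer, and manage data effectively.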
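The link between byte counts and value ranges can be sketched in Python using the standard `struct` module, whose `<`-prefixed format codes have fixed, platform-independent sizes. The type labels in `FORMATS` and the helper names are assumptions made for this illustration.

```python
import struct

BITS_PER_BYTE = 8

# Fixed-size struct format codes ("<" selects standard sizes).
FORMATS = {
    "int8": "<b",
    "int16": "<h",
    "int32": "<i",
    "int64": "<q",
    "float32": "<f",
    "float64": "<d",
}

def width_in_bits(fmt: str) -> int:
    """Size of a struct format in bits, derived from its size in bytes."""
    return struct.calcsize(fmt) * BITS_PER_BYTE

def signed_range(bits: int) -> tuple[int, int]:
    """Smallest and largest value of a two's-complement integer."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1

for name, fmt in FORMATS.items():
    print(name, struct.calcsize(fmt), "bytes =", width_in_bits(fmt), "bits")

print(signed_range(8))    # (-128, 127)
```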

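Data Storage and Transfer: The capacity of data storage devices (hard drives, solid-state drives, flash drives) is quoted in bytes, while the speed of data transfer links (USB, network protocols) is usually quoted in bits per second, so converting between the two involves a factor of 8. Optimizations in these technologies often rely on the assumption of 8-bit bytes, and networking standards commonly use the term “octet” to mean exactly 8 bits.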
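The factor of 8 matters most when estimating transfer times. The Python sketch below assumes an idealized link with no protocol overhead, so real transfers would take longer; the function names and the example figures are illustrative.

```python
BITS_PER_BYTE = 8

def bytes_per_second(link_bits_per_second: float) -> float:
    """Convert a link speed in bits per second to bytes per second."""
    return link_bits_per_second / BITS_PER_BYTE

def transfer_seconds(size_bytes: int, link_bits_per_second: float) -> float:
    """Ideal time to move size_bytes over the link, ignoring all overhead."""
    return size_bytes * BITS_PER_BYTE / link_bits_per_second

link = 100_000_000        # a 100 Mbit/s link
file_size = 1_000_000_000  # a 1 GB file (10^9 bytes)

print(bytes_per_second(link))             # 12500000.0, i.e. 12.5 MB/s
print(transfer_seconds(file_size, link))  # 80.0 seconds
```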

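Security and Encryption: In cryptography, the 8-bit byte is a basic unit for encryption algorithms. Key and block sizes are specified in bits but chosen as multiples of 8: AES, for example, works on 128-bit (16-byte) blocks with 128-, 192-, or 256-bit keys, and its substitution step operates on individual bytes. This byte-oriented design lets such algorithms run efficiently on ordinary hardware, highlighting the importance of this standard in digital security.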
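To show what "operating on bytes" looks like in practice, here is a toy Python sketch that combines a message with a random key one byte at a time using XOR. It is an illustration of byte-wise processing only, not AES and not a recommended encryption scheme; `xor_bytes` is a hypothetical helper.

```python
import secrets

def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR two equal-length byte strings, one byte at a time."""
    if len(data) != len(key):
        raise ValueError("data and key must have the same length")
    return bytes(d ^ k for d, k in zip(data, key))

message = b"8 bits per byte"
key = secrets.token_bytes(len(message))   # one random key byte per message byte

ciphertext = xor_bytes(message, key)
recovered = xor_bytes(ciphertext, key)    # XOR with the same key undoes itself

print(len(key) * 8, "key bits")
print(recovered == message)               # True

# Key sizes quoted in bits translate to whole bytes, e.g. 256 bits = 32 bytes.
aes256_key_bytes = 256 // 8
```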

Conclusion

The 8-bit byte has become an integral part of the computing landscape, underlying how data is stored, processed, and communicated. From its origins in early computing systems to its modern implications in programming, data storage, and security, the choice of 8 bits per byte reflects a balance between technical efficiency and practical necessity. As technology continues to evolve, with advancements in fields like quantum computing and data storage, the foundational role of the 8-bit byte in digital systems will likely endure, a testament to the foresight and design considerations of the pioneers in computer science.

FAQ Section

What is the origin of the term “byte” in computing?

The term “byte” was coined in 1956 by Werner Buchholz at IBM to describe a group of bits used to represent a character of text. It is a deliberate respelling of “bite,” referring to the amount of data a computer could “bite off” and process at one time; the “y” was chosen so that the word would not be mistaken for “bit.”

Why was 8 bits chosen as the standard size for a byte?

The choice of 8 bits as the standard byte size was influenced by the need for efficient character representation, compatibility with binary and hexadecimal systems, efficiency in data processing, and simplicity in memory addressing.

How does the 8-bit byte standard affect programming and data types?

The standardization of 8 bits per byte influences the design of programming languages and data types, ensuring compatibility and efficiency in data representation and manipulation. Programmers must understand this standard to work effectively with data in their programs.

What role does the 8-bit byte play in data encryption and security?

In cryptography, the 8-bit byte is a basic unit for encryption algorithms. Key and block sizes are chosen as multiples of 8 bits, and many algorithms process data byte by byte, which lets them run efficiently on ordinary hardware and makes the standard important for digital security applications.

Will the 8-bit byte remain relevant with future advancements in computing technology?

Despite advancements in technology, the 8-bit byte is likely to remain a foundational element in computing due to its wide adoption and the efficiency it provides in data representation and processing. Its relevance may evolve but will endure as a basic unit of digital information.