Convert Letters Into Numbers

Converting letters into numbers is a fundamental part of many encoding schemes and numeric representations. The conversion can be done in different ways, depending on the requirements of the task or the system being used. One of the most common methods is the alphabetical index, where each letter of the alphabet is assigned a number based on its position in the sequence.

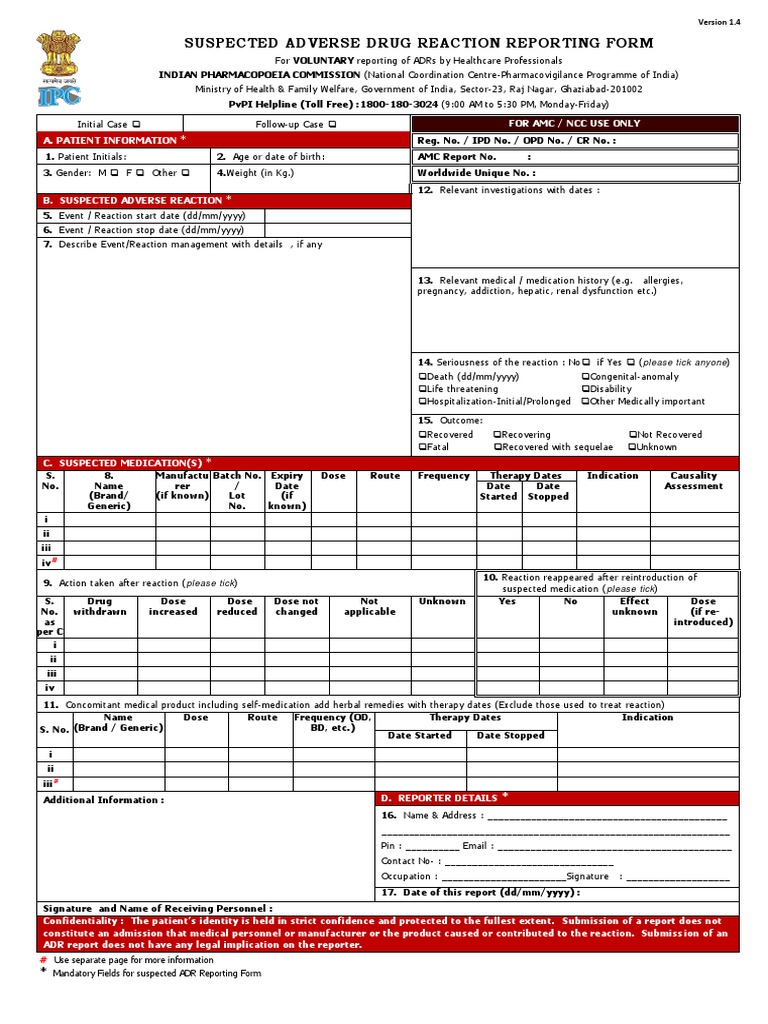

Understanding the Basics

To begin with, let’s explore how the alphabetical index works. In this system, the letter ‘A’ is assigned the number 1, ‘B’ is assigned the number 2, and this pattern continues up to ‘Z’, which is assigned the number 26. This method is straightforward and can be used for basic encoding and decoding purposes.

The alphabetical index appears in everyday settings such as word puzzles and simple ciphers. For instance, to convert the word “HELLO” into numbers, you take each letter's alphabetical position: H=8, E=5, L=12, L=12, O=15.
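
The alphabetical index can be sketched in a few lines of Python. This is a minimal illustration, assuming that uppercase and lowercase letters map to the same number and that non-letter characters (spaces, punctuation) are simply dropped; the function names `encode` and `decode` are hypothetical.

```python
import string

# Map each uppercase letter to its 1-based position: A=1, B=2, ..., Z=26.
LETTER_TO_NUMBER = {letter: i for i, letter in enumerate(string.ascii_uppercase, start=1)}
NUMBER_TO_LETTER = {i: letter for letter, i in LETTER_TO_NUMBER.items()}


def encode(word: str) -> list[int]:
    """Convert letters to alphabet positions, ignoring case and skipping non-letters."""
    return [LETTER_TO_NUMBER[ch] for ch in word.upper() if ch in LETTER_TO_NUMBER]


def decode(numbers: list[int]) -> str:
    """Convert alphabet positions (1-26) back to uppercase letters."""
    return "".join(NUMBER_TO_LETTER[n] for n in numbers)


print(encode("HELLO"))             # [8, 5, 12, 12, 15]
print(decode([8, 5, 12, 12, 15]))  # HELLO
```

Because the mapping discards case and punctuation, decoding returns uppercase letters only.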

Advanced Encoding Techniques

Beyond the simple alphabetical index, computers rely on standardized encodings that assign numbers to many more characters. These include:

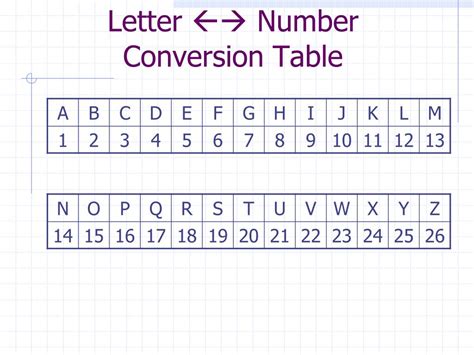

ASCII Code: The American Standard Code for Information Interchange (ASCII) is a 7-bit character-encoding scheme that assigns the numbers 0 to 127 to English letters, digits, punctuation marks, and control characters. For example, the ASCII code for ‘A’ is 65, and for ‘a’ is 97. This system is widely used in computer programming and allows text to be represented in a form that machines can process.
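
In Python, the built-in `ord()` and `chr()` functions convert between a character and its numeric code. The sketch below assumes plain English text; the helper names `ascii_codes` and `alphabet_position` are illustrative, and the second one shows how the alphabetical index can be derived from ASCII by subtracting the code for ‘A’.

```python
# ord() returns a character's code and chr() reverses it.
# For characters in the ASCII range (0-127), these codes are the ASCII values.
print(ord("A"))   # 65
print(ord("a"))   # 97
print(chr(72))    # H

# Uppercase and lowercase forms of the same letter differ by exactly 32.
print(ord("a") - ord("A"))  # 32


def ascii_codes(text: str) -> list[int]:
    """Return the ASCII code of each character; raises UnicodeEncodeError for non-ASCII input."""
    return list(text.encode("ascii"))


def alphabet_position(letter: str) -> int:
    """Derive the 1-based alphabet position (A=1 ... Z=26) from the ASCII code.

    Assumes `letter` is a single English letter; other characters give meaningless results.
    """
    return ord(letter.upper()) - ord("A") + 1


print(ascii_codes("HELLO"))    # [72, 69, 76, 76, 79]
print(alphabet_position("h"))  # 8
```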

Unicode: Similar to ASCII but far more comprehensive, Unicode assigns a unique number, called a code point, to characters from the world's writing systems as well as to symbols and emoji. Its first 128 code points match ASCII, so ‘A’ is 65 in both. Unicode has become the standard for encoding characters in modern computing, ensuring that text in many languages can be represented and exchanged globally.
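
A short Python sketch shows that Unicode code points agree with ASCII for ‘A’ and extend to accented letters, currency symbols, and emoji. It also shows that UTF-8, one common way of storing code points as bytes, uses between one and four bytes per character. The helper name `code_point_info` is hypothetical.

```python
def code_point_info(ch: str) -> tuple[str, int, int]:
    """Return the U+XXXX label, the code point, and the UTF-8 byte count for one character."""
    cp = ord(ch)  # ord() returns the Unicode code point of any character
    # Code points are conventionally written as "U+" followed by at least four hex digits.
    return f"U+{cp:04X}", cp, len(ch.encode("utf-8"))


for ch in ["A", "é", "€", "😀"]:
    print(ch, code_point_info(ch))
# A ('U+0041', 65, 1)
# é ('U+00E9', 233, 2)
# € ('U+20AC', 8364, 3)
# 😀 ('U+1F600', 128512, 4)
```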

Practical Applications

The conversion of letters into numbers has numerous practical applications across different fields:

Data Compression and Encryption: Compression and encryption algorithms operate on numbers rather than letters, so converting text into a numerical representation (usually bytes) is a necessary first step. The algorithm then reduces the size of that numeric data or scrambles it so that the original text is no longer recognizable.
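
The following Python sketch uses the standard-library `zlib` module as an example compressor to show text being encoded into bytes before compression. The repetitive sample string is an assumption chosen so that the size reduction is visible; the byte encoding step alone does not shrink the data, the compression algorithm does.

```python
import zlib

text = "to be or not to be, " * 20

# Step 1: convert the text into numbers (bytes) using UTF-8.
raw = text.encode("utf-8")

# Step 2: the compression algorithm works on those bytes, not on the letters themselves.
compressed = zlib.compress(raw)

print(len(raw), "bytes before compression")  # 400 bytes before compression
print(len(compressed), "bytes after compression")

# Decompressing and decoding recovers the original text exactly.
restored = zlib.decompress(compressed).decode("utf-8")
assert restored == text
```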

Bioinformatics: In the study of genetics, each base in a DNA sequence (A, C, G, T) can be represented by a unique number, facilitating the analysis and comparison of genetic data through computational methods.
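
As an illustration, the Python sketch below assumes the mapping A=0, C=1, G=2, T=3 (one convention among several) and packs each base into 2 bits, a compact representation that stores four bases per byte. The function names are hypothetical.

```python
# Assumed mapping: each base gets a number from 0 to 3, so it fits in 2 bits.
BASE_TO_CODE = {"A": 0, "C": 1, "G": 2, "T": 3}
CODE_TO_BASE = {code: base for base, code in BASE_TO_CODE.items()}


def encode_dna(sequence: str) -> list[int]:
    """Convert a DNA string such as 'GATTACA' into a list of numeric codes."""
    return [BASE_TO_CODE[base] for base in sequence.upper()]


def pack_2bit(codes: list[int]) -> int:
    """Pack the codes into one integer, 2 bits per base, first base in the highest bits."""
    value = 0
    for code in codes:
        value = (value << 2) | code
    return value


def unpack_2bit(value: int, length: int) -> str:
    """Recover the sequence; `length` is needed because leading A's (code 0) leave no bits set."""
    bases = []
    for _ in range(length):
        bases.append(CODE_TO_BASE[value & 0b11])  # read the lowest 2 bits
        value >>= 2
    return "".join(reversed(bases))


codes = encode_dna("GATTACA")
print(codes)                            # [2, 0, 3, 3, 0, 1, 0]
packed = pack_2bit(codes)
print(unpack_2bit(packed, len(codes)))  # GATTACA
```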

Cryptography: Cryptographic algorithms perform mathematical operations on numbers, so plain text must first be converted into numerical form. This conversion underpins both encryption, which makes messages unreadable to unauthorized parties, and digital signatures, which authenticate the sender of a message.
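
The Caesar cipher, a classical technique rather than a modern one, shows the basic pattern: letters become numbers, arithmetic is applied, and the result is turned back into letters. This sketch uses a 0-based index (A=0) so that shifting works with modulo 26; the `caesar` function is a hypothetical helper, and the cipher is trivially breakable, so it only illustrates the idea. Modern algorithms such as AES or RSA apply far more complex mathematics to the byte values of the text.

```python
import string

ALPHABET = string.ascii_uppercase  # 'ABC...Z', index 0 = 'A'


def caesar(text: str, shift: int) -> str:
    """Shift each letter by `shift` positions modulo 26; leave other characters unchanged."""
    result = []
    for ch in text.upper():
        if ch in ALPHABET:
            number = ALPHABET.index(ch)       # letter -> number (A=0 ... Z=25)
            shifted = (number + shift) % 26   # arithmetic on the number, wrapping past Z
            result.append(ALPHABET[shifted])  # number -> letter
        else:
            result.append(ch)
    return "".join(result)


ciphertext = caesar("HELLO", 3)
print(ciphertext)              # KHOOR
print(caesar(ciphertext, -3))  # HELLO
```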

Technical Breakdown

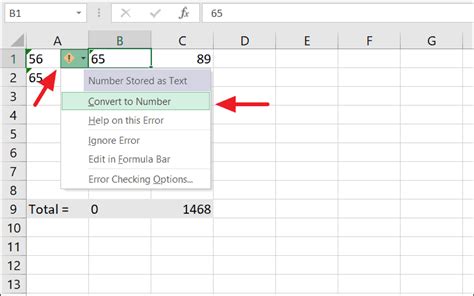

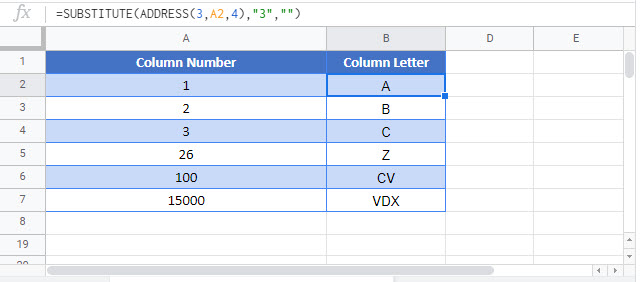

A basic encoding process involves the following steps:

- Character Identification: Each character (letter, digit, etc.) in the text to be encoded is identified.

- Assignment of Numeric Value: Based on the chosen encoding scheme (e.g., alphabetical index, ASCII), a numeric value is assigned to each character.

- String Conversion: The text, now represented as a series of numbers, can be further manipulated or transmitted as needed.

This process underscores the flexibility and utility of numeric representations in computing and data analysis.
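
The three steps can be sketched as a small Python function that takes the encoding scheme as a parameter, so the same pipeline works for the alphabetical index or for ASCII/Unicode codes (via the built-in `ord`). The names `encode_text` and `alphabet_index`, the `letters_only` option, and the space-separated output format are illustrative assumptions.

```python
import string
from typing import Callable


def alphabet_index(ch: str) -> int:
    """Alphabetical index: A=1 ... Z=26, case-insensitive (English letters only)."""
    return ord(ch.upper()) - ord("A") + 1


def encode_text(text: str, scheme: Callable[[str], int], letters_only: bool = False) -> str:
    # Step 1, character identification: split the text into characters,
    # optionally dropping anything that is not an English letter.
    chars = [ch for ch in text if not letters_only or ch in string.ascii_letters]
    # Step 2, assignment of numeric value: apply the chosen scheme to each character.
    numbers = [scheme(ch) for ch in chars]
    # Step 3: the text is now a series of numbers; here it is joined into a
    # space-separated string so it can be stored or transmitted.
    return " ".join(str(n) for n in numbers)


# ord() gives ASCII/Unicode codes; alphabet_index gives alphabet positions.
print(encode_text("Hi!", ord))                                # 72 105 33
print(encode_text("Hi!", alphabet_index, letters_only=True))  # 8 9
```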

Decision Framework for Choosing an Encoding Scheme

When deciding on a method for converting letters into numbers, consider the following criteria:

- Purpose of Encoding: Is it for data compression, encryption, or another application? Different purposes may require different encoding schemes.

- Language Support: If the text includes characters from multiple languages, a comprehensive scheme like Unicode might be necessary.

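- Computational Efficiency: The choice of encoding affects both the size of the data and the speed of later processing. For example, UTF-8 uses one byte for each ASCII character and up to four bytes for others, while UTF-32 always uses four bytes per character, which takes more space but gives every character the same width.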
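
To make the language-support and efficiency criteria concrete, the Python sketch below measures how many bytes the same text needs under a few standard encodings. The sample strings and the helper name `encoded_sizes` are assumptions; the little-endian UTF-16 and UTF-32 variants are used so that no byte-order mark is added to the count.

```python
from typing import Optional


def encoded_sizes(text: str) -> dict[str, Optional[int]]:
    """Byte size of `text` under several encodings; None means the encoding cannot represent it."""
    sizes: dict[str, Optional[int]] = {}
    for encoding in ["ascii", "utf-8", "utf-16-le", "utf-32-le"]:
        try:
            sizes[encoding] = len(text.encode(encoding))
        except UnicodeEncodeError:
            sizes[encoding] = None  # e.g. ASCII has no code for 'é' or '€'
    return sizes


print(encoded_sizes("Hello"))
# {'ascii': 5, 'utf-8': 5, 'utf-16-le': 10, 'utf-32-le': 20}
print(encoded_sizes("Café €5"))
# {'ascii': None, 'utf-8': 10, 'utf-16-le': 14, 'utf-32-le': 28}
```

The `None` entry for the second string reflects that ASCII has no codes for ‘é’ or ‘€’.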

Future Trends Projection

As technology advances, the methods for converting letters into numbers will continue to evolve. Emerging trends include:

- Quantum Computing Applications: New encoding schemes may be developed to take advantage of quantum computing’s unique capabilities, potentially leading to more efficient data processing and encryption methods.

- Artificial Intelligence Integration: AI could play a larger role in developing adaptive encoding schemes that learn from the data they process, optimizing encoding for specific tasks or improving security.

Conclusion

The conversion of letters into numbers is a fundamental process with a wide range of applications, from simple puzzles to complex cryptographic protocols. Understanding the different methods and their applications can provide valuable insights into how information is processed and secured in the digital age. As technology continues to advance, the development of new encoding schemes and the refinement of existing ones will remain crucial for enhancing data security, efficiency, and utility.

What is the most common method for converting letters into numbers?

The most common method is the alphabetical index, where 'A' equals 1, 'B' equals 2, and so on up to 'Z' equaling 26.

What is the difference between ASCII and Unicode?

ASCII is a 7-bit character-encoding scheme with 128 codes, covering the English alphabet, digits, punctuation, and control characters. Unicode is a far more comprehensive scheme that assigns unique numbers to characters, symbols, and emoji from the world's writing systems, making it the global standard for character representation.

How is the conversion of letters into numbers used in cryptography?

In cryptography, converting plain text into numbers is a critical step in encryption and decryption processes. It allows mathematical algorithms to be applied to secure the data, making it unreadable without the appropriate decryption key.

By exploring the various methods and applications of converting letters into numbers, it becomes clear that this process is not only foundational to computer science and data analysis but also continually evolving to meet the demands of emerging technologies and global communication.